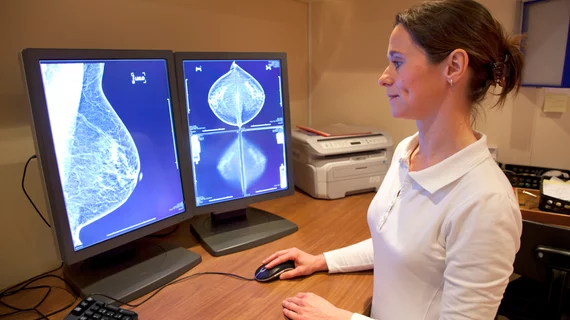

Does AI mean the end for breast radiologists?

Someone had to come right out and ask. Six researchers representing four medical specialties have done so, weighing the odds by reviewing a representative sample of the literature.

Their informed take is running in the open-access journal Cureus.

Their short answer: No. It doesn’t.

The lead author is Lawman Chiwome, an internal medicine physician with the U.K.’s National Health Service. His co-authors, all from the California Institute of Behavioral Neurosciences and Psychology, work in family medicine, internal medicine, anesthesia and neurology.

After offering a useful primer on AI’s general categories and its role to date in breast imaging, the team considers three challenges they believe are likely to keep the technology from bumping breast radiologists into the unemployment line.

1. Iffy AI acceptance. Chiwome and colleagues note that radiology has long been a technology-driven specialty. However, it’s not just radiologists who need to buy in to AI’s role in their work.

“There is a need to sensitize [referring physicians] about AI through different channels to make the adoption of AI smooth,” the authors write. “We also need consent from patients to use AI on image interpretation. Patients should be able to choose between AI and humans.”

2. Frequently insufficient training data. No matter how massive the inputs, image-based training datasets aren’t enough if the data isn’t properly labeled for training, the authors point out. “Image labeling takes a lot of time and needs a lot of effort, and also, this process must be very robust,” they write.

Also in this category of challenges is the inescapability of rare conditions. Not only are highly unusual findings too few and far between to train algorithms, Chiwome and co-authors write, but nonhuman modes of detection sometimes also mistake image noise and variations for pathologies.

Along those same lines, if image data used in training is “from a different ethnic group, age group or different gender, it may give different results if given raw data from other diverse groups of people.”

3. Looming medicolegal landmines. Here the authors raise the question of who will be held responsible when AI makes an error and harm is done as a result. “Physicians always take responsibility for the medical decisions made for patients,” they write. “In case something went wrong, the programmers may not take responsibility, given that the machines are continuously learning in ways not known by the developers. Recommendations provided by the tool may need to be ratified by a radiologist, who may agree or disagree with the software.”

“We predict a future where radiologists will continue to make strides and draw many benefits from the use of more sophisticated AI systems over the next 10 years,” Chiwome et al. conclude. “If you are interested in breast radiology, from medical students to radiologists, anticipate a more satisfying and rewarding career, especially if you have skills in programming.”

The study is available in full for free.