Gottlieb: Let’s not slap the label ‘medical device’ on AI software that only helps clinicians make care decisions

The 21st Century Cures Act exempted most software used purely for clinical decision support (CDS) from FDA oversight. That was in 2016. Six years later, the agency rethought the move, issuing guidance that made it harder for CDS software sellers to earn such exemptions.

The 2022 guidance reflected concern over physicians deferring to CDS recommendations when pressed for time or uncertain of their own judgments. Whenever the potential exists for this scenario to play out, FDA suggested at the time, the software should be regulated as a device and thus subjected to premarket review.

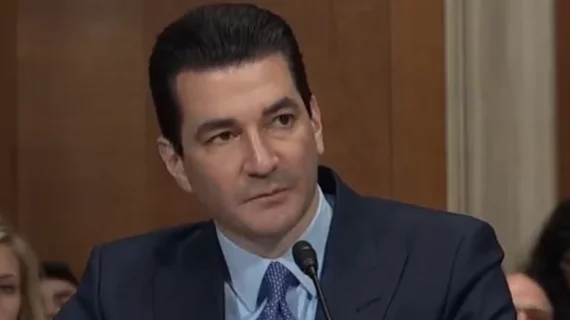

In an opinion piece published Feb. 6 in JAMA Health Forum, a former FDA commissioner argues the 2022 criteria are ripe for a rethink.

By returning to the intent of the 21st Century Cures Act and the policies advanced from 2017 to 2019, writes Scott Gottlieb, MD, the FDA would regulate CDS software “based on how the data analysis is presented to healthcare clinicians instead of focusing on how clinicians would use the information to inform their judgment.”

AI tools that merely inform or advise clinicians—and make no attempt to autonomously diagnose conditions or direct treatments—“should not be subjected to premarket review,” he contends.

Gottlieb pays particular attention to artificial intelligence (AI) software designed to integrate with EMR systems. Such software, he points out, can “generate insights that might otherwise go unnoticed by clinicians.”

Instead of regulating such software packages as medical devices, he suggests, why not have the FDA allow EMR suppliers to bring these tools to market as long as they meet FDA criteria for how the tools are designed and validated?

“[B]y drawing on real-world evidence of these systems in action in the post-market setting, the agency can verify that they genuinely enhance the quality of medical decision-making,” Gottlieb writes.

More:

“If these tools are classified as medical devices merely because they draw from multiple data sources or possess analytical capabilities that are so comprehensive and intelligent that clinicians are likely to accept their analyses in full, then nearly any AI tool embedded in an EMR could fall under regulation. The risk is that EMR developers may attempt to circumvent regulatory uncertainty by omitting these features from their software. This could deny clinicians access to AI tools that have the potential to transform the productivity and safety of medical care.”

Gottlieb was appointed FDA head by President Donald Trump in 2017 and served in that role until April 2019.

Hear him out on AI-aided CDS here.