Deep-learning algorithm matches, outperforms radiologists in screening chest X-rays

A deep-learning algorithm was significantly faster than, and just as accurate as, most radiologists in analyzing chest X-rays for several diseases, according to a study led by Stanford University researchers. Based on the findings, radiologists may soon be able to interpret chest X-rays and diagnose diseases for patients at a much quicker pace.

“Usually, we see AI algorithms that can detect a brain hemorrhage or a wrist fracture—a very narrow scope for single-use cases,” Matthew Lungren, MD, MPH, an assistant professor of radiology at Stanford, said in a statement. “But here we’re talking about 14 different pathologies analyzed simultaneously, and it’s all through one algorithm.”

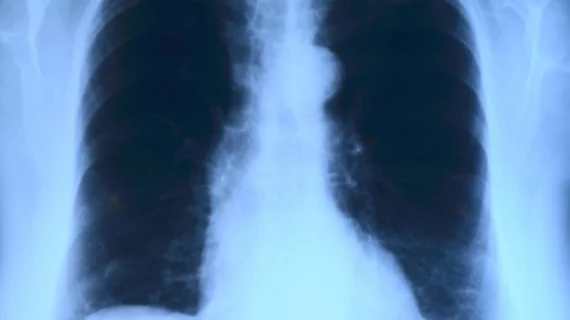

Stanford researchers developed a convolutional neural network, called CheXNeXt, to detect the presence of 14 different pathologies, including pneumonia, pleural effusion, pulmonary masses and nodules, in chest X-rays, according to the study published in PLOS Medicine. The deep-learning algorithm was trained on a large public dataset of chest X-rays and validated on a held-out set of 420 images. Its performance on that set was then compared with that of nine radiologists using the area under the receiver operating characteristic curve (AUC), a measure of how well a binary classifier separates positive from negative cases across all decision thresholds.
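To make the AUC metric concrete, the following is a minimal sketch, not the study's evaluation code. It uses the standard interpretation of AUC as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case. The function name `auc` and the toy labels and scores are hypothetical and chosen only for illustration.

```python
def auc(labels, scores):
    """Area under the ROC curve via pairwise comparison.

    Counts, over every (positive, negative) pair, how often the positive
    case gets the higher score; ties count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")

    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical toy data: 1 = pathology present, 0 = absent.
toy_labels = [1, 1, 1, 0, 0, 0]
toy_scores = [0.9, 0.6, 0.4, 0.7, 0.3, 0.2]
toy_auc = auc(toy_labels, toy_scores)  # 7 of 9 pairs ranked correctly
print(f"AUC = {toy_auc:.3f}")
```

An AUC of 1.0 means every positive case is ranked above every negative one, while 0.5 is what random guessing would produce.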

The algorithm achieved radiologist-level performance on 11 pathologies and was outperformed by radiologists on the remaining three, according to the study. On those three (cardiomegaly, emphysema and hiatal hernia), the radiologists achieved significantly higher AUCs of 0.888, 0.911 and 0.985, respectively, compared with the algorithm’s 0.831, 0.704 and 0.851.

However, the algorithm performed significantly better than radiologists in detecting atelectasis, achieving an AUC of 0.862 compared with the radiologists’ 0.808. There were no significant differences in the AUCs for the other 10 pathologies, the study stated.

Radiologists also took significantly longer to interpret the 420 images. Radiologists interpreted the images in a total of 240 minutes, while CheXNeXt did so in just 1.5 minutes.
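As a rough back-of-the-envelope check on those figures, the sketch below converts the quoted totals into a speed-up factor and a per-image reading time. It assumes the 240 minutes and 1.5 minutes are comparable totals for the same 420 images, as the article states; the variable names are illustrative only.

```python
# Figures quoted in the article.
n_images = 420
radiologist_minutes = 240.0
algorithm_minutes = 1.5

# How many times faster the algorithm was overall.
speedup = radiologist_minutes / algorithm_minutes

# Average time spent per image, in seconds.
radiologist_sec_per_image = radiologist_minutes * 60 / n_images
algorithm_sec_per_image = algorithm_minutes * 60 / n_images

print(f"Speed-up: {speedup:.0f}x")
print(f"Radiologists: {radiologist_sec_per_image:.1f} s per image")
print(f"Algorithm: {algorithm_sec_per_image:.2f} s per image")
```

Under these assumptions the algorithm is about 160 times faster, averaging roughly a fifth of a second per image against about 34 seconds for the radiologists.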

Chest X-ray interpretation is critical for the detection of thoracic diseases, like tuberculosis and lung cancer, according to researchers. However, the task is typically time-consuming and requires expert radiologists to read the images.

Researchers believe that, with further study, the technology has the potential to “improve healthcare delivery and increase access to chest radiograph expertise for the detection of a variety of acute diseases.” They are now working to improve the algorithm and hope to conduct in-clinic testing.

“We’re seeking opportunities to get our algorithm trained and validated in a variety of settings to explore both its strengths and blind spots,” Stanford graduate student Pranav Rajpurkar said in a statement. “The algorithm has evaluated over 100,000 X-rays so far, but now we want to know how well it would do if we showed it a million X-rays—and not just from one hospital, but from hospitals around the world.”